Implicit Depth Perception

Rethinking the boundary between dimensions: using 2D drawings to generate 3D objects.

Breaking Dimensional Boundaries

This experimental project considers the boundary between 2D drawings and 3D models, a boundary that a computer struggles with, yet a human considers virtually non-existent. How much information does a computer need to bring a 2D illustration to life, or to 3D-print a 2D sketch? And, more importantly, what is the easiest way of providing it with that information?

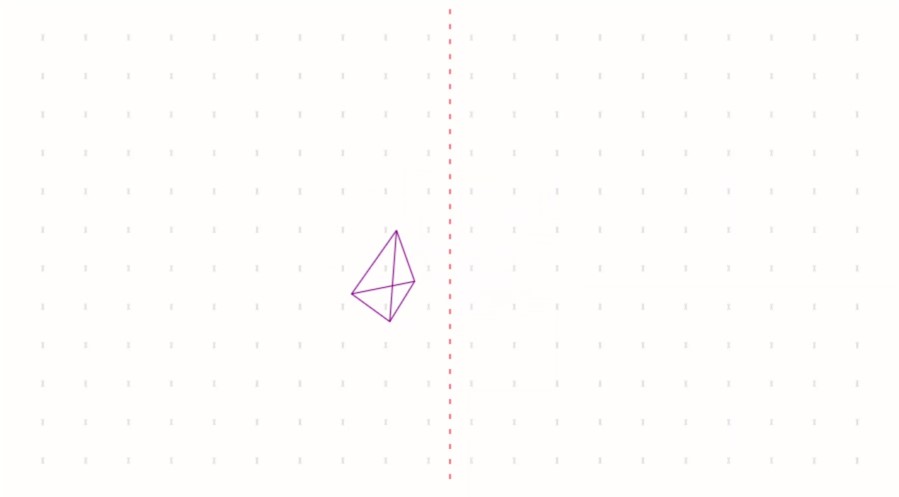

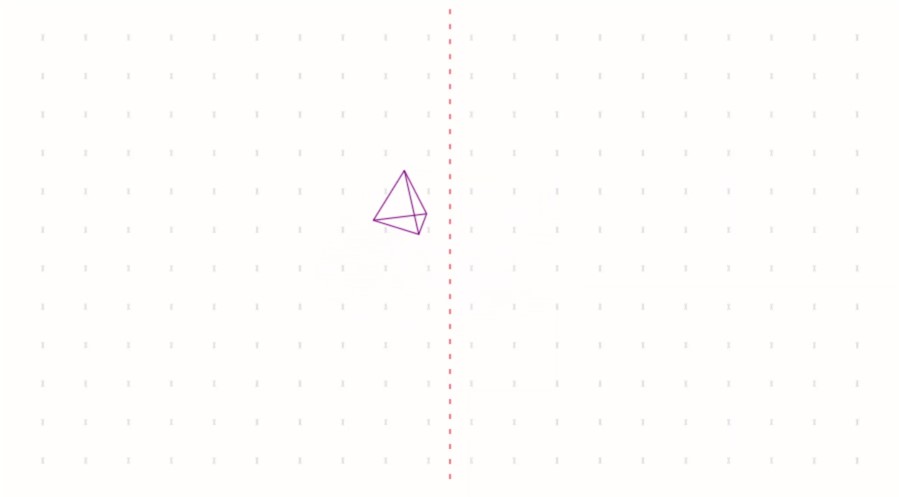

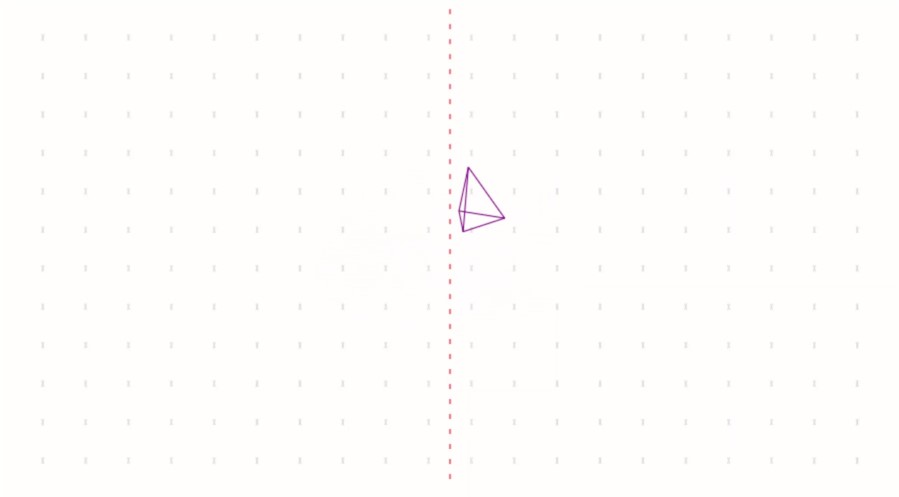

Demonstration

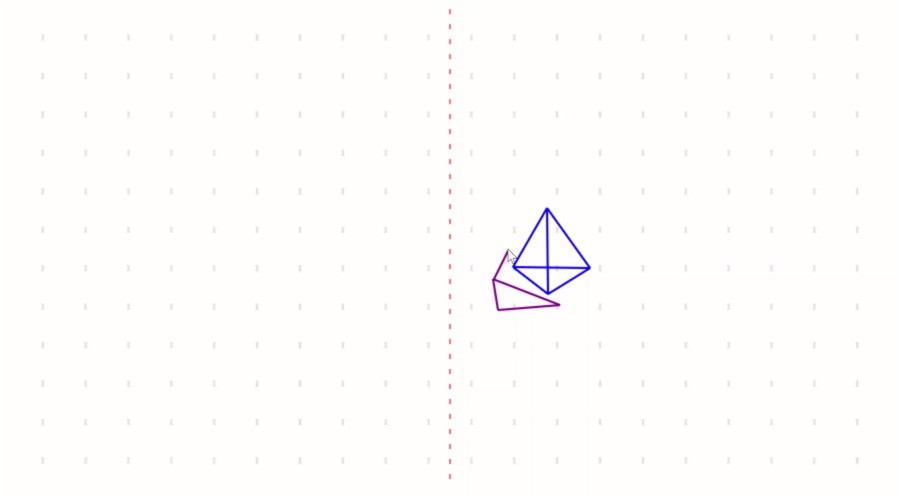

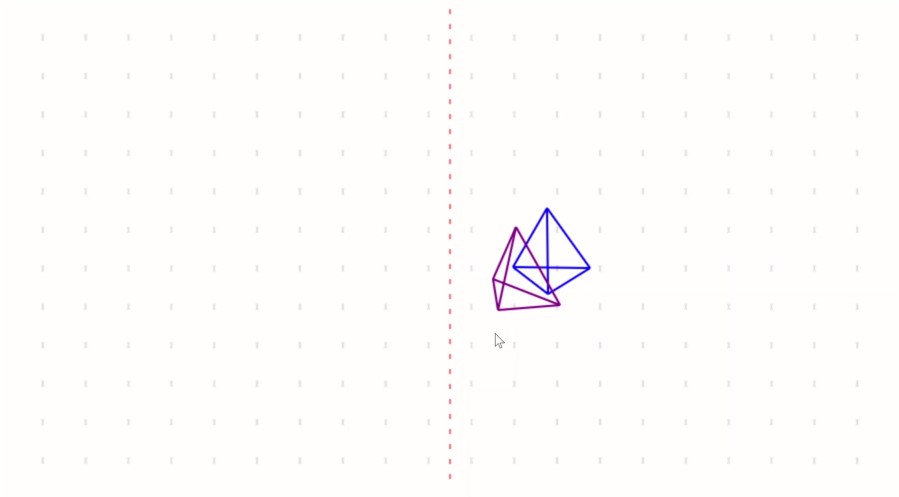

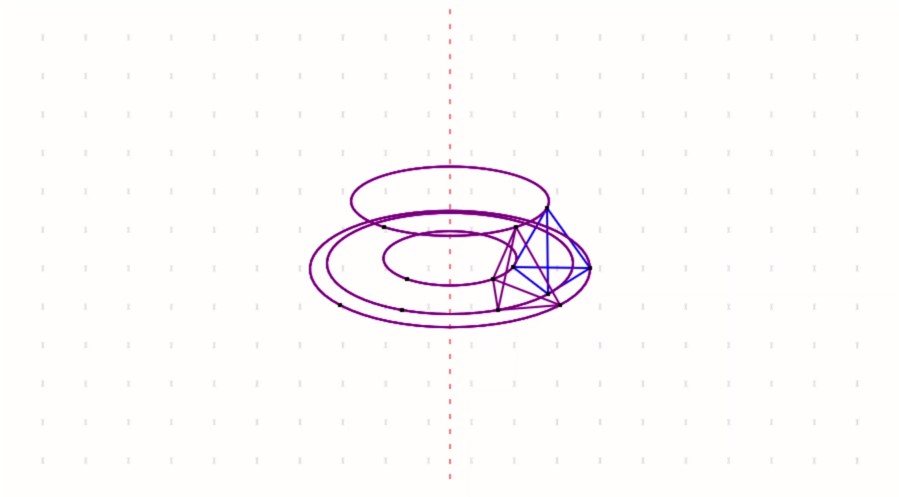

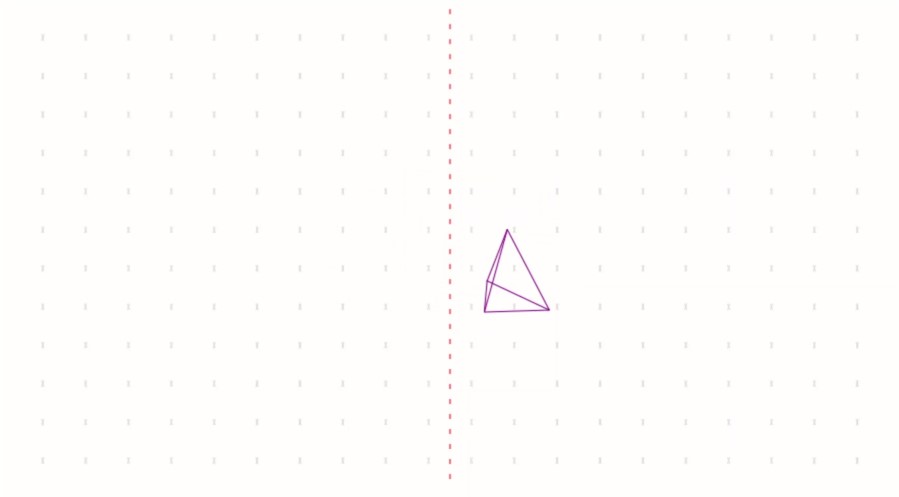

The user draws the same object (in this case a triangular-based pyramid) from two perspectives, the second rotated 45° away from the first. The algorithm fits optimised distortion circles through the drawn points; each circle represents the path a vertex would travel if the object were rotated about its central axis, as viewed under two-point perspective. From these paths the depth of each point is calculated implicitly, generating a 3D model, which is then animated.
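To make the idea of implicit depth concrete, here is a minimal sketch of the underlying geometry. It is not the project's algorithm: it swaps two-point perspective and the optimised distortion circles for a plain orthographic projection, and it assumes the vertices are drawn in the same order in both views and that the rotation axis is the vertical line x = 0. The function name `recover_depth` and its parameters are hypothetical. Under those assumptions, a vertex at horizontal position x and depth z appears at x·cos θ + z·sin θ after the object turns by θ, so the depth follows directly from the two drawn positions.

```python
import math


def recover_depth(view_a, view_b, angle_deg=45.0):
    """Estimate (x, y, z) for each vertex from two 2D drawings.

    Assumptions (not taken from the original project):
    - orthographic projection, not two-point perspective;
    - view_b shows the object rotated by angle_deg about the vertical axis x = 0;
    - the i-th point in view_a and view_b is the same vertex.
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    if abs(sin_t) < 1e-9:
        raise ValueError("the two views must differ by a non-trivial rotation")

    points = []
    for (xa, ya), (xb, yb) in zip(view_a, view_b):
        # After rotation the drawn x is: xb = xa*cos(theta) + z*sin(theta)
        z = (xb - xa * cos_t) / sin_t
        # Height is unchanged by the rotation; average the two drawn heights
        # to soften small inconsistencies between the sketches.
        y = (ya + yb) / 2.0
        points.append((xa, y, z))
    return points


# Usage: two hand-entered views of a four-vertex shape, 45 degrees apart.
front = [(0.0, 1.0), (1.0, 0.0), (-0.5, 0.0), (-0.5, 0.0)]
turned = [(0.0, 1.0), (0.71, 0.0), (0.21, 0.0), (-0.92, 0.0)]
model = recover_depth(front, turned)
```

Because the division by sin θ amplifies any error in the drawn positions, small rotations make the estimate very sensitive to sketching mistakes, which is one reason some form of fitting or averaging is needed in practice.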

The Flaw in the Plan

If a human could draw an object from two perspectives with 100% accuracy, this program would work perfectly. Sadly, no human can. Although the optimisation process goes some way towards averaging out human error, a more intelligent algorithm would be needed to make this prototype useful. More importantly, it requires a second innovative user interface for correcting the generated 3D model. This is the stage that most machine-intelligence applications seem to overlook: the voice dictation on your phone works well, but correcting it when it makes a mistake is a different story.